Overview

- When I was working on my master’s thesis at ETH Zürich, I started wondering about the different ways in which large language models could be used. To what extent can they “think” and explore new topics? Also, could they aid human thinking?

- To find out, I decided to build a new user interface for ChatGPT, designed for exploring new topics. My goal was to make it visually appealing and to shift the burden of thinking to the language model, so that the user only has to point it in the right direction.

- I created the user interface using Vue.js and the data visualization library D3. The UI is node-based and uses a force simulation. Furthermore, I used a serverless architecture to deploy a relay to the OpenAI API (to keep my API key secret).

- Overall, this was a fun experiment. The node-based UI, together with the force simulation, makes for an engaging user experience, and it can actually help explore new topics! This article shows how ThinkBot works.

Context

- 🗓️ Timeline: One weekend in 04/2023

- 🛠️ Project Type: Side project

- ▶️ Live Demo: ThinkBot

Technologies

- Frontend: TypeScript, Vue, D3 (force simulation), Tailwind

- Frontend hosting: Vercel

- OpenAI API wrapper hosting: Cloudflare Workers (serverless function)

- Large Language Model (LLM): OpenAI’s gpt-3.5-turbo

Details & Impressions

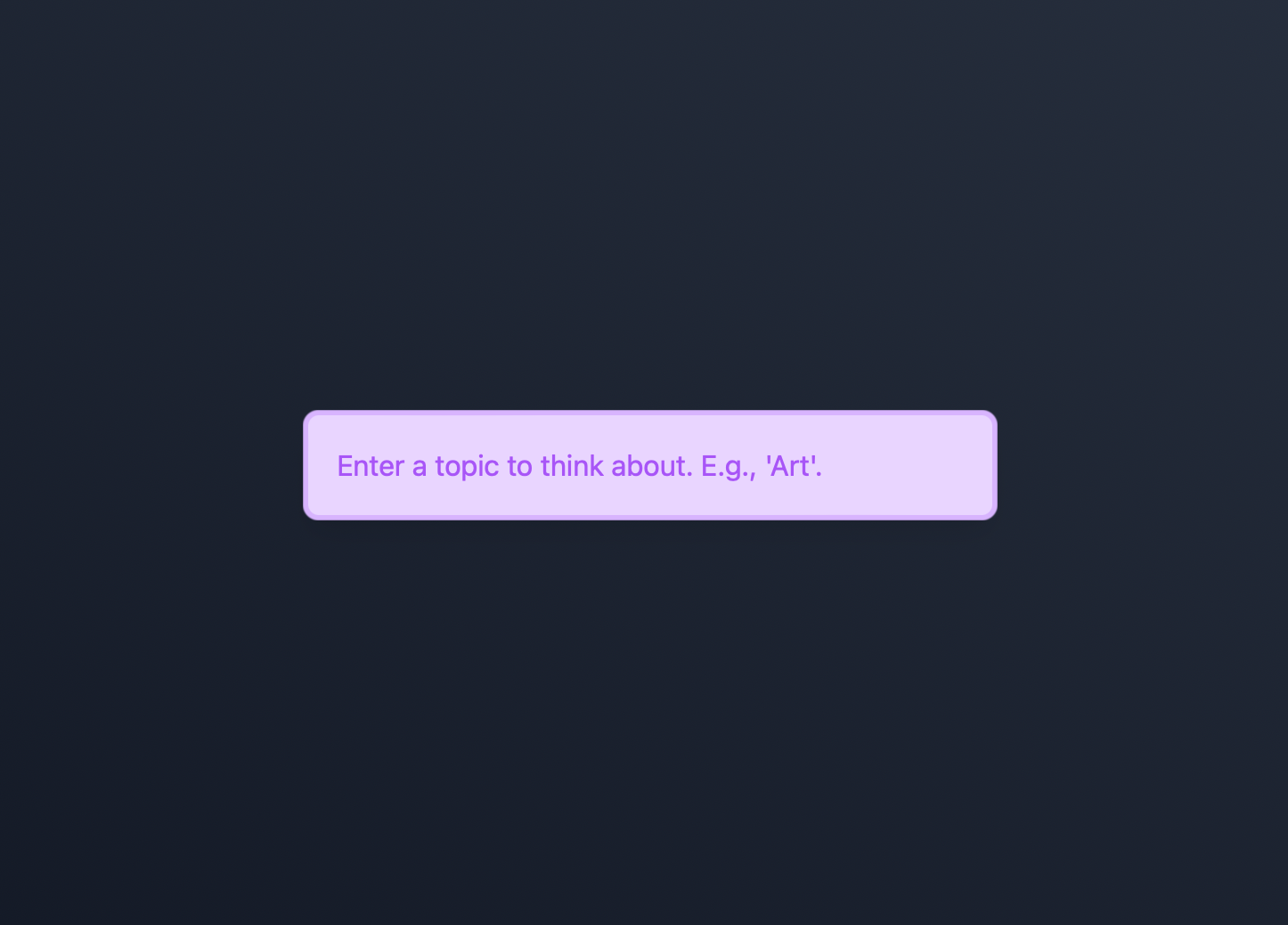

What happens when AI is used to create questions about a topic? And what happens if AI is also used to answer these questions?

The project ThinkBot explores this idea. You start on an empty canvas and enter a topic to “think” about:

What follows is a series of questions and answers, generated by a language model and guided by you.

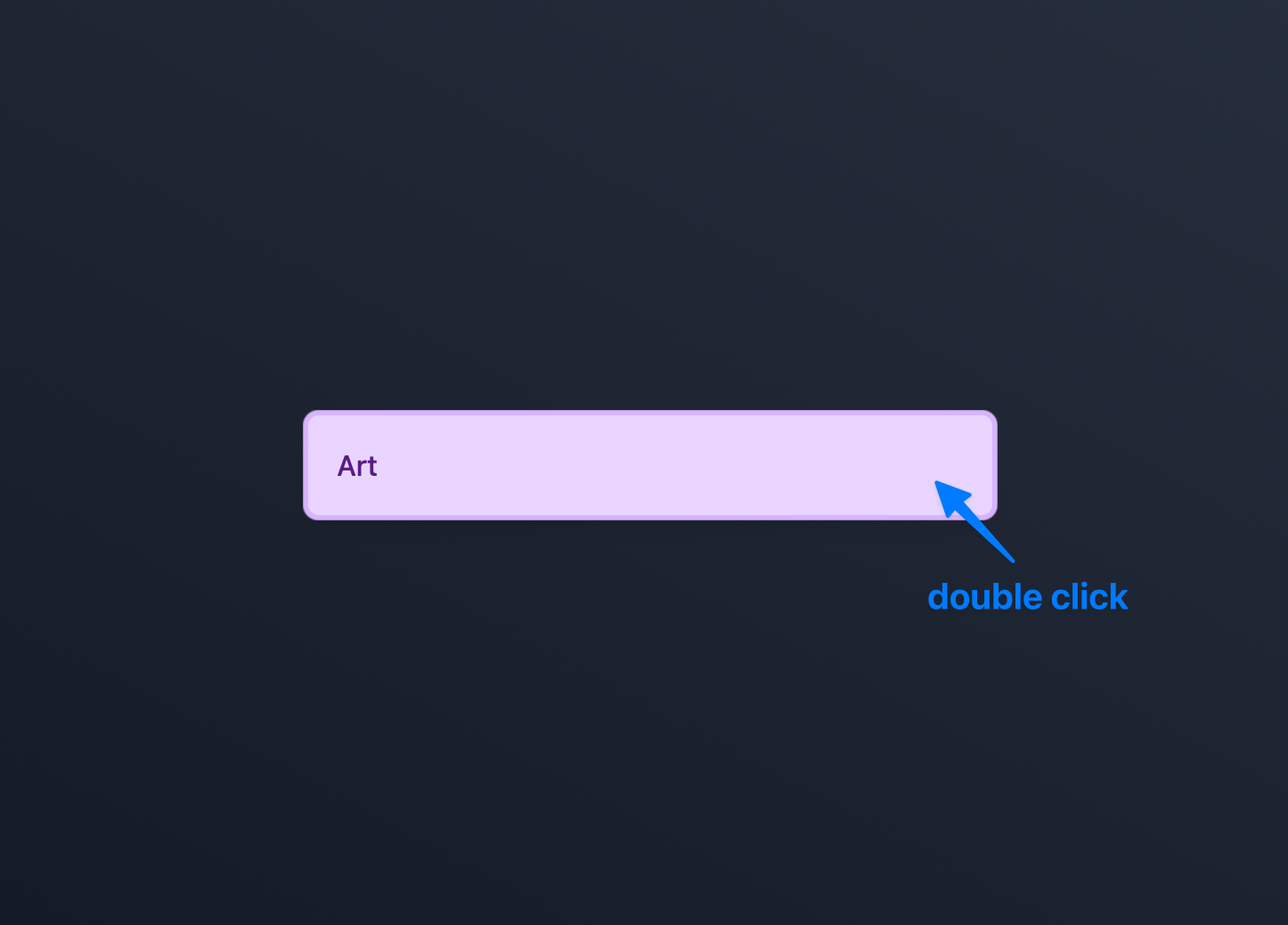

As an example, let’s say we want to explore the concept “Art”. We can do so by entering the term and double-clicking.

Doing so will generate a question (blue box):

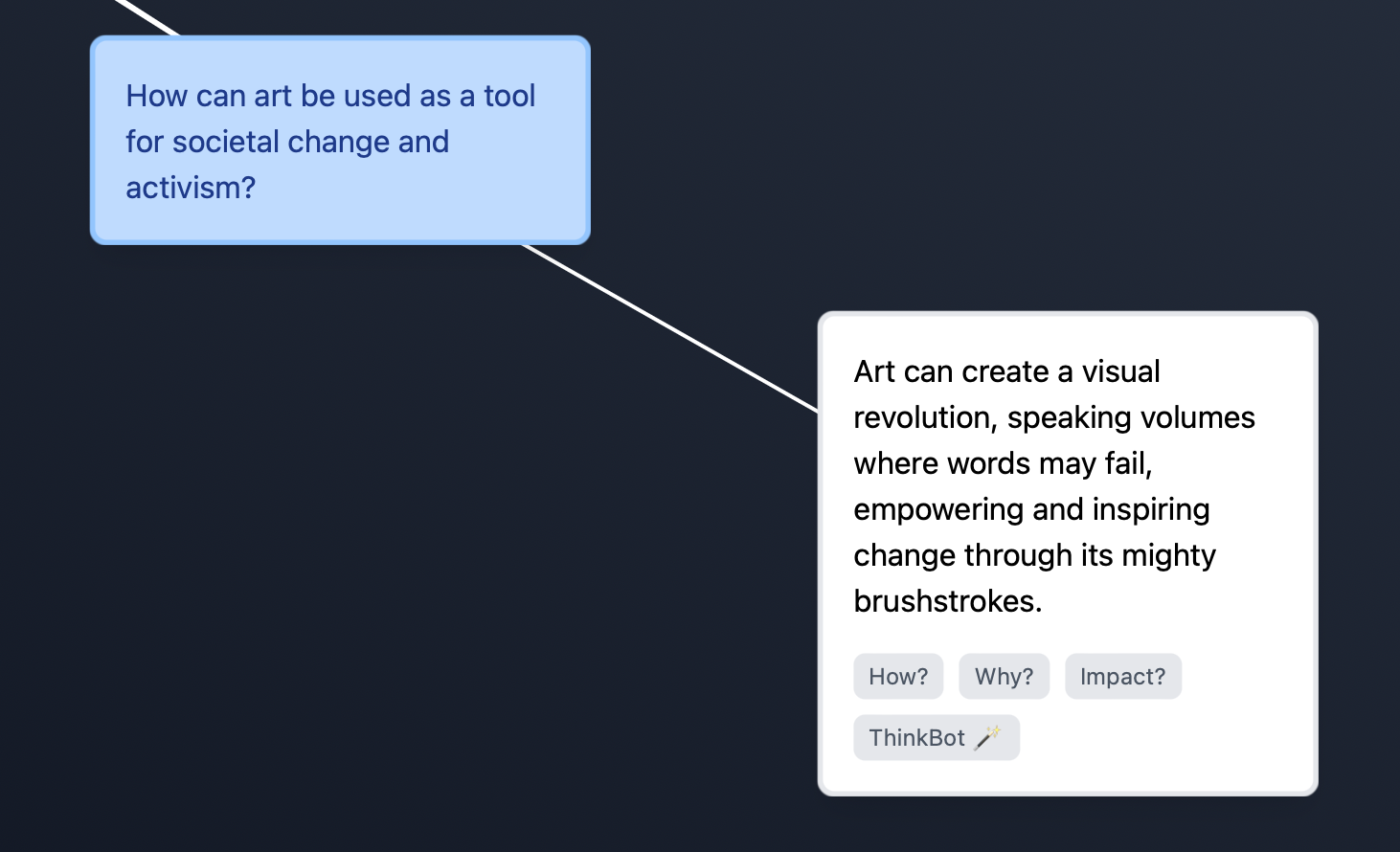

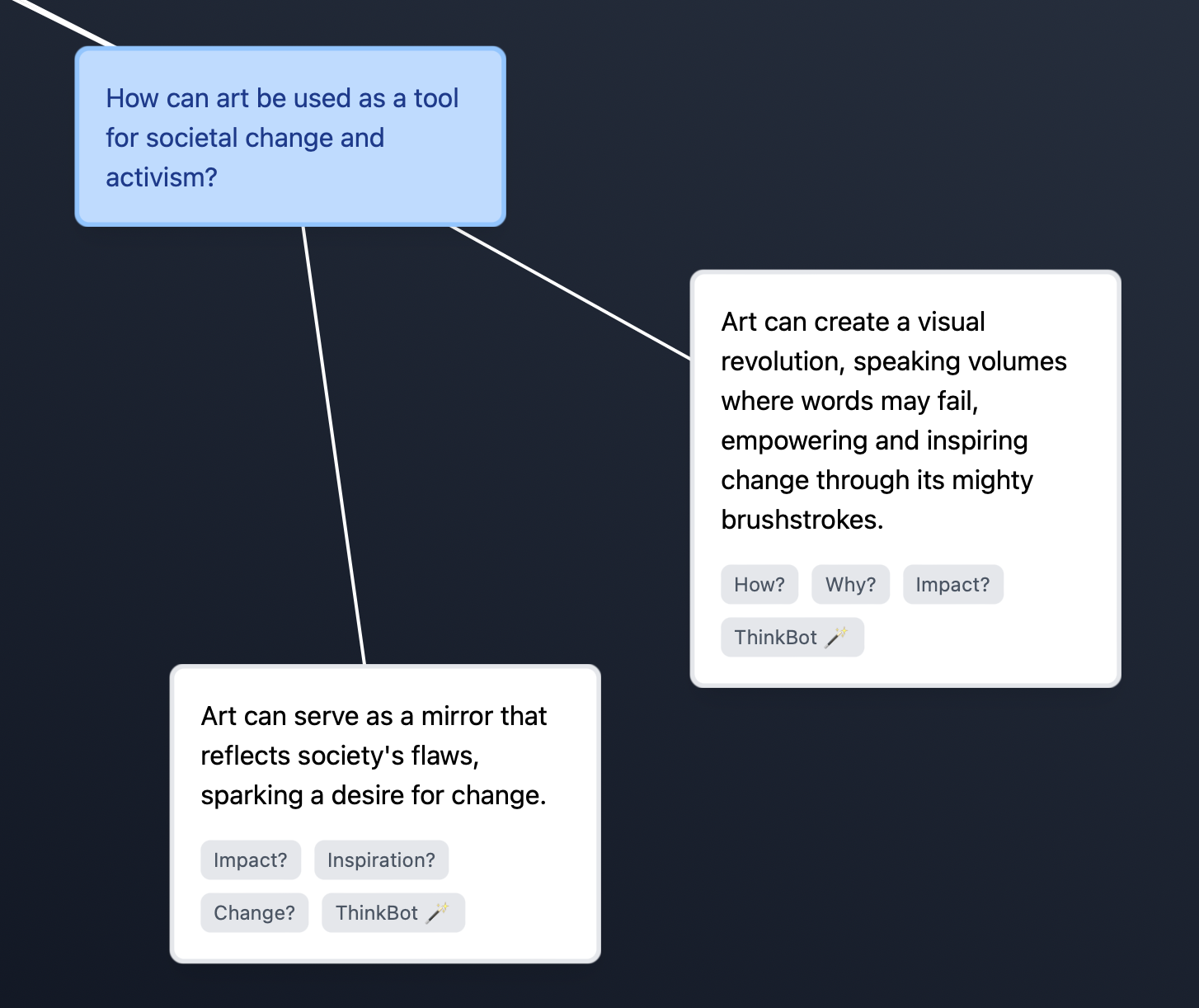

If we find this question interesting, we can explore it further by double-clicking it. This will generate an answer (white box) to the question:

If we’re not happy with the answer, we can generate another one by double-clicking the question again:

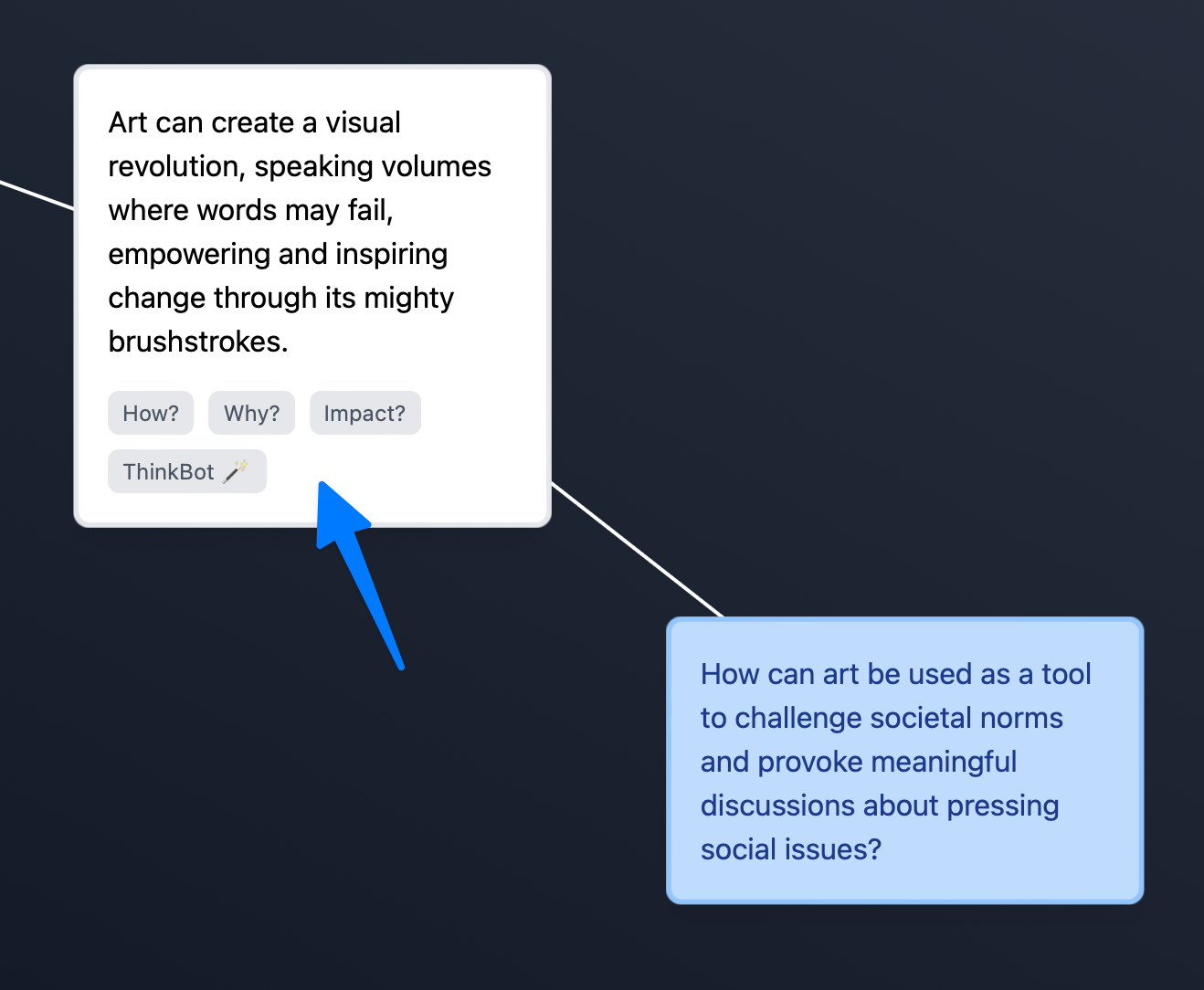

We can explore an answer further by generating a related question. Again, this can be done by double-clicking an answer:

This process can be repeated indefinitely. The result is an almost automatic thought process in which you only have to decide the direction of thought. The machine thinks for you, but you still decide which chain of thought is worth exploring.

The following video (30s) shows this process in motion:

As you can see, ThinkBot follows two very simple rules:

- If you double-click a question, it will generate a response.

- If you double-click a response, it will generate a related question, exploring that topic further.

At each step, you can decide which idea to explore next.
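The two rules amount to a small graph-building loop. The TypeScript sketch below models it; the type names, `onDoubleClick` and the synchronous `generate` callback are hypothetical stand-ins (in the real app, text comes from the LLM and is streamed in), and treating the starting topic like an answer (it yields a question) follows the “Art” example.

```typescript
// Hypothetical data model for the canvas (not ThinkBot's actual types).
type NodeKind = "topic" | "question" | "answer";

interface ThoughtNode {
  id: number;
  kind: NodeKind;
  text: string;
}

interface ThoughtLink {
  source: number; // parent node id
  target: number; // generated child node id
}

interface ThoughtGraph {
  nodes: ThoughtNode[];
  links: ThoughtLink[];
}

// The two rules: a question yields an answer; anything else
// (the starting topic or an answer) yields a new question.
function childKind(kind: NodeKind): NodeKind {
  return kind === "question" ? "answer" : "question";
}

// Hypothetical double-click handler; `generate` stands in for the LLM call.
// Double-clicking the same node again simply adds another child, which is
// how a second answer to the same question appears.
function onDoubleClick(
  graph: ThoughtGraph,
  nodeId: number,
  generate: (parent: ThoughtNode, kind: NodeKind) => string,
): ThoughtNode {
  const parent = graph.nodes.find((n) => n.id === nodeId);
  if (!parent) throw new Error(`unknown node ${nodeId}`);
  const kind = childKind(parent.kind);
  const child: ThoughtNode = {
    id: graph.nodes.length, // nodes are never removed in this sketch, so this is unique
    kind,
    text: generate(parent, kind),
  };
  graph.nodes.push(child);
  graph.links.push({ source: parent.id, target: child.id });
  return child;
}
```

Because every double-click only appends a node and a link, the user's choices are exactly the edges of the graph, which is also what the force simulation needs as input.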

So… Can It Help Explore New Topics?

I would say it can definitely help, but it can’t fully replace thinking. It could be great for exploring a topic that is new to you but known to others. As long as the language model “knows” something about the topic, meaning that it has seen this topic during training, it can help you explore it. However, I doubt that it would work well for truly novel ideas, as these would be out of distribution for the language model.

Either way, I’ll let you be the judge. You can try the app out here:

How ThinkBot Was Made

The system can roughly be split into two parts: the user interface and the content generation.

User Interface

My goal was to make ThinkBot feel dynamic, just like thought itself. It should not be just a bulleted list; it must feel alive. The simplest alternative to a list is a mind-map, but even a mind-map would have been too static. Thus, I added movement, more precisely a force simulation.

In simple terms, this means that the positions and velocities of the mind-map nodes are computed dynamically according to different forces in the system: nodes repel each other, and links pull connected nodes together.
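ThinkBot itself uses D3’s force simulation for this; in D3 the two forces correspond to `forceManyBody()` (repulsion, with a negative strength) and `forceLink()`. To make the idea concrete without depending on D3, here is a deliberately simplified, hand-rolled TypeScript version of one simulation step. It is not how d3-force is implemented (d3-force, for example, uses a Barnes-Hut approximation for the many-body force and a cooling “alpha” schedule), and all constants are hypothetical.

```typescript
// Simplified force-directed layout step (illustration only, not d3-force).
interface SimNode {
  id: string;
  x: number;
  y: number;
  vx: number;
  vy: number;
}

interface SimLink {
  source: string; // node id
  target: string; // node id
}

// Hypothetical tuning constants (not taken from ThinkBot).
const REPULSION = 500; // strength of the inverse-square repulsion
const LINK_LENGTH = 80; // rest length of each link spring
const LINK_STRENGTH = 0.05; // spring stiffness
const DAMPING = 0.6; // fraction of velocity kept per tick

function tick(nodes: SimNode[], links: SimLink[]): void {
  // 1. Repulsion: every pair of nodes pushes apart, more strongly when close.
  for (let i = 0; i < nodes.length; i++) {
    for (let j = i + 1; j < nodes.length; j++) {
      const a = nodes[i];
      const b = nodes[j];
      let dx = b.x - a.x;
      let dy = b.y - a.y;
      let dist2 = dx * dx + dy * dy;
      if (dist2 === 0) {
        // Coincident nodes: pick an arbitrary direction to avoid dividing by zero.
        dx = 0.01;
        dy = 0;
        dist2 = 0.0001;
      }
      const dist = Math.sqrt(dist2);
      const force = REPULSION / dist2;
      const fx = (dx / dist) * force;
      const fy = (dy / dist) * force;
      a.vx -= fx;
      a.vy -= fy;
      b.vx += fx;
      b.vy += fy;
    }
  }
  // 2. Links act as springs pulling connected nodes toward LINK_LENGTH apart.
  const byId = new Map(nodes.map((n) => [n.id, n] as [string, SimNode]));
  for (const link of links) {
    const s = byId.get(link.source);
    const t = byId.get(link.target);
    if (!s || !t) continue;
    const dx = t.x - s.x;
    const dy = t.y - s.y;
    const dist = Math.sqrt(dx * dx + dy * dy) || 0.01;
    const stretch = (dist - LINK_LENGTH) * LINK_STRENGTH;
    const fx = (dx / dist) * stretch;
    const fy = (dy / dist) * stretch;
    s.vx += fx;
    s.vy += fy;
    t.vx -= fx;
    t.vy -= fy;
  }
  // 3. Damp velocities, then move the nodes.
  for (const n of nodes) {
    n.vx *= DAMPING;
    n.vy *= DAMPING;
    n.x += n.vx;
    n.y += n.vy;
  }
}
```

Calling `tick` repeatedly (D3 runs its simulation on a timer and emits a `tick` event each step, where the SVG is redrawn) lets the nodes settle where repulsion and link tension balance. Adding a node perturbs that balance, which is what makes the mind-map visibly move.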

In addition, the generated content is streamed directly into the mind-map nodes, which adds another dimension of movement.
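Streaming into a node can be sketched as follows, assuming the client requests the completion with `stream: true` and the relay passes OpenAI’s server-sent events through unchanged. In that format, each event is a `data:` line carrying a JSON chunk whose `choices[0].delta.content` holds the next piece of text, and the stream ends with `data: [DONE]`. `parseSSE`, `streamIntoNode` and `TextNode` are hypothetical names; in a Vue app, appending to a reactive `label` would be enough to re-render the node.

```typescript
// Result of parsing whatever text has arrived so far.
interface ParseResult {
  deltas: string[]; // text fragments to append, in order
  rest: string; // trailing partial line, kept for the next chunk
  done: boolean; // true once "data: [DONE]" was seen
}

// Parses OpenAI-style server-sent events line by line.
function parseSSE(buffer: string): ParseResult {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? ""; // the last piece may be an incomplete line
  const deltas: string[] = [];
  let done = false;
  for (const raw of lines) {
    const line = raw.trim(); // also strips a trailing "\r"
    if (!line.startsWith("data:")) continue; // blank separators, comments
    const payload = line.slice("data:".length).trim();
    if (payload === "[DONE]") {
      done = true;
      continue;
    }
    const chunk = JSON.parse(payload);
    // The first chunk usually carries only the role, so content may be absent.
    const text = chunk.choices?.[0]?.delta?.content;
    if (typeof text === "string") deltas.push(text);
  }
  return { deltas, rest, done };
}

// Hypothetical node shape: only the label matters here.
interface TextNode {
  label: string;
}

// Reads the relay's response body and grows the node's label as text arrives.
async function streamIntoNode(body: ReadableStream<Uint8Array>, node: TextNode): Promise<void> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  for (;;) {
    const result = await reader.read();
    if (result.done) break;
    buffer += decoder.decode(result.value, { stream: true });
    const parsed = parseSSE(buffer);
    buffer = parsed.rest;
    for (const delta of parsed.deltas) node.label += delta;
    if (parsed.done) break;
  }
}
```

Keeping the incomplete trailing line in `rest` matters because network chunks do not respect line boundaries: a single JSON event can be split across two reads.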

Together, this makes ThinkBot feel more “alive”.

Content Generation

The content is created using a large language model (LLM). To be precise, it is generated by OpenAI’s gpt-3.5-turbo, the same model that also powers ChatGPT (as of early 2023).

When creating an answer to a question, the LLM is instructed to create original, non-obvious answers. As context, it is supplied with the question and with all previously generated answers to that question.

Questions are created in a similar way. Again, the LLM is instructed to create non-obvious questions.
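One way to assemble this context in the Chat Completions message format (`system`, `user` and `assistant` roles) is sketched below. The instruction texts, the function names and the choice to replay earlier outputs as `assistant` turns are assumptions for illustration, since ThinkBot’s actual prompts are not shown here. For questions, it is likewise assumed that the context is the answer being explored plus the questions already generated from it.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical instructions; the real ThinkBot prompts are not published here.
const ANSWER_PROMPT = "Answer the question with an original, non-obvious idea. Be concise.";
const QUESTION_PROMPT =
  "Ask one original, non-obvious question that explores the given text further.";
const AGAIN = "Give another one that is clearly different from the previous ones.";

// Shared builder: instruction, the context text, then earlier outputs replayed
// as assistant turns so the model can avoid repeating itself.
function buildMessages(instruction: string, context: string, previous: string[]): ChatMessage[] {
  const messages: ChatMessage[] = [
    { role: "system", content: instruction },
    { role: "user", content: context },
  ];
  for (const earlier of previous) {
    messages.push({ role: "assistant", content: earlier });
    messages.push({ role: "user", content: AGAIN });
  }
  return messages;
}

// Context for an answer: the question plus all answers generated for it so far.
function answerMessages(question: string, previousAnswers: string[]): ChatMessage[] {
  return buildMessages(ANSWER_PROMPT, question, previousAnswers);
}

// Context for a question (assumed): the answer being explored plus earlier questions from it.
function questionMessages(answer: string, previousQuestions: string[]): ChatMessage[] {
  return buildMessages(QUESTION_PROMPT, answer, previousQuestions);
}
```

Including the earlier outputs is what lets a second double-click on the same question produce a genuinely different answer rather than a paraphrase of the first.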

Finding a good prompt (the input to the LLM) as well as good settings for the token decoding step took some experimentation. ThinkBot uses the LLM with a high “temperature”, meaning that there is more randomness when the next token is sampled. This makes the output more interesting and less predictable, which is essential here.
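Temperature works by dividing the model’s raw next-token scores (logits) z by a value T before the softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T): T < 1 sharpens the distribution, while T > 1 flattens it and gives less likely tokens more weight. The small TypeScript function below shows this effect on made-up logits; it illustrates the concept and is not OpenAI’s sampler. In the Chat Completions API, this is the `temperature` request parameter (accepted range 0 to 2); the exact value ThinkBot uses is not stated here.

```typescript
// Softmax with temperature: p_i = exp(z_i / T) / sum_j exp(z_j / T).
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) throw new Error("temperature must be > 0");
  const scaled = logits.map((z) => z / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((z) => Math.exp(z - max));
  const sum = exps.reduce((acc, e) => acc + e, 0);
  return exps.map((e) => e / sum);
}

// Draw one token index from the distribution (inverse-CDF sampling).
function sampleIndex(probs: number[], rand: () => number = Math.random): number {
  let r = rand();
  for (let i = 0; i < probs.length; i++) {
    r -= probs[i];
    if (r < 0) return i;
  }
  return probs.length - 1; // guard against floating-point round-off
}

// Made-up logits for three candidate tokens.
const logits = [2, 1, 0];
const cold = softmaxWithTemperature(logits, 0.5); // ≈ [0.87, 0.12, 0.02]
const hot = softmaxWithTemperature(logits, 1.5); // ≈ [0.56, 0.29, 0.15]
```

At the low temperature, the top token wins almost nine times out of ten; at the high one, the runner-up tokens are picked far more often, which is the kind of variety ThinkBot relies on.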

Because the client application running in the browser cannot directly communicate with the LLM without exposing a private API key, I wrote a small serverless function which forwards requests from the client to OpenAI servers and pipes the response back to the client.
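A relay of this kind might look like the following Cloudflare Worker in the Workers module syntax (`export default { fetch }`). The secret name `OPENAI_API_KEY`, the permissive CORS policy and the injectable `fetchImpl` parameter (added only to make the function testable) are assumptions, not details from ThinkBot’s code. Because the upstream `Response.body` is passed straight through, streamed completions reach the browser chunk by chunk.

```typescript
// Hypothetical secret name; in Workers, secrets are exposed on the `env` object.
interface Env {
  OPENAI_API_KEY: string;
}

const OPENAI_URL = "https://api.openai.com/v1/chat/completions";

// CORS headers so the browser app (served from another origin) may call the relay.
// "*" is permissive; a real deployment would likely restrict this to its own origin.
const CORS_HEADERS: Record<string, string> = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "POST, OPTIONS",
  "Access-Control-Allow-Headers": "Content-Type",
};

async function relay(
  request: Request,
  env: Env,
  fetchImpl: typeof fetch = fetch, // injectable for testing
): Promise<Response> {
  // Answer the browser's CORS preflight without contacting OpenAI.
  if (request.method === "OPTIONS") {
    return new Response(null, { status: 204, headers: CORS_HEADERS });
  }
  if (request.method !== "POST") {
    return new Response("Method not allowed", { status: 405, headers: CORS_HEADERS });
  }
  // Forward the client's JSON body unchanged, adding the secret key server-side.
  const upstream = await fetchImpl(OPENAI_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${env.OPENAI_API_KEY}`,
    },
    body: await request.text(),
  });
  // Pipe the (possibly streamed) body straight back to the browser.
  const headers = new Headers(CORS_HEADERS);
  headers.set("Content-Type", upstream.headers.get("Content-Type") ?? "application/json");
  return new Response(upstream.body, { status: upstream.status, headers });
}

export default {
  fetch(request: Request, env: Env): Promise<Response> {
    return relay(request, env);
  },
};
```

With the key stored as a Worker secret, the browser only ever talks to the relay, so the key never appears in client code. An open relay like this can still be used by anyone who finds its URL, so restricting the allowed origin or adding rate limiting would be sensible next steps.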